Claude AI

Claude AI is a family of large language models developed by Anthropic, an artificial intelligence research and deployment company focused on AI safety, reliability, and alignment.

Claude AI is designed to generate, analyze, and summarize natural language, with particular emphasis on handling long and complex inputs such as legal contracts, technical documentation, research papers, and policy materials. As of 2026, Claude AI is used primarily in enterprise, research, and professional environments.

Generative AI and approach

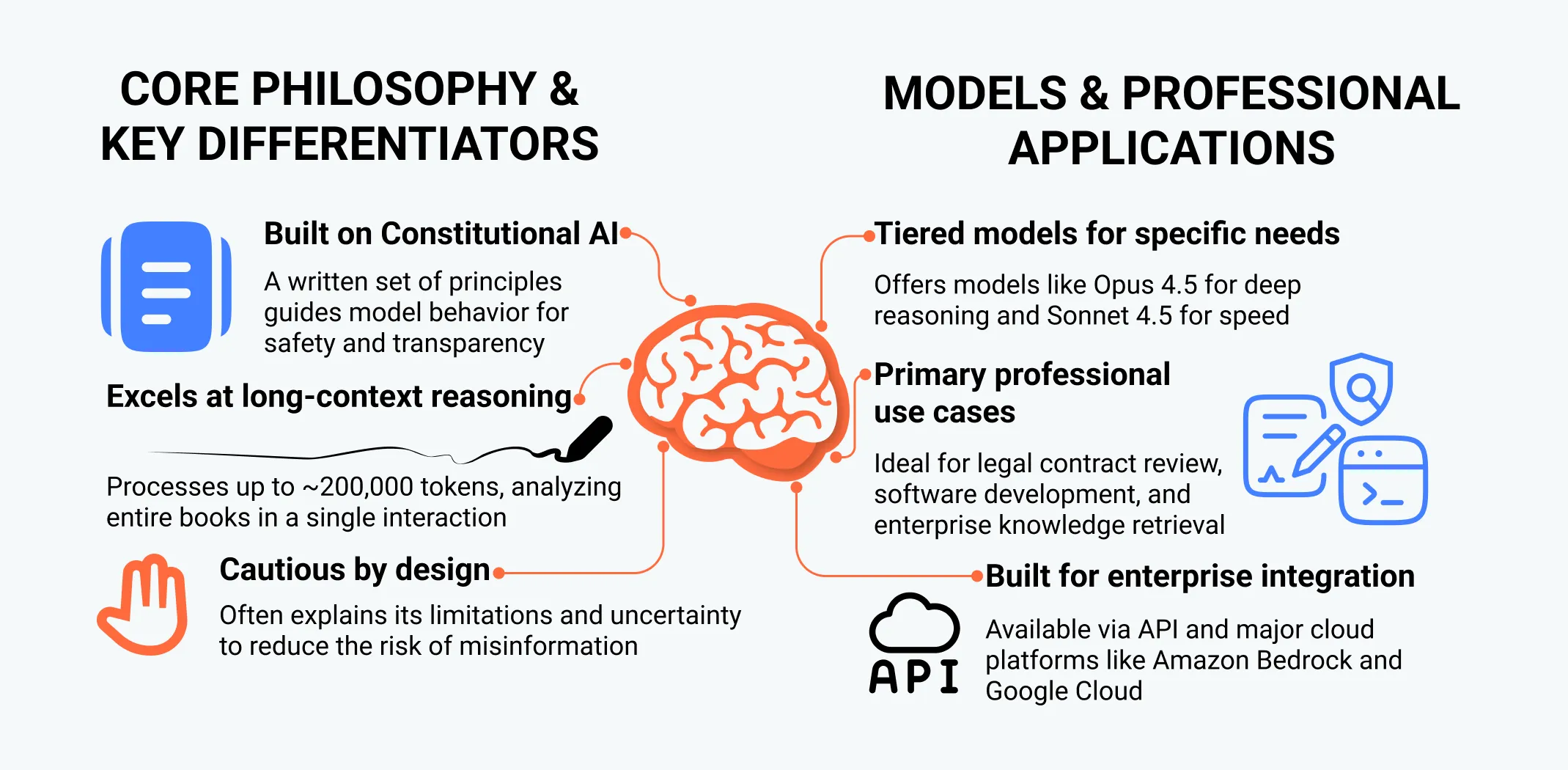

Claude AI belongs to the category of generative artificial intelligence systems, meaning it produces original text based on statistical patterns learned during training rather than retrieving predefined answers. The system is often described as comparatively cautious in tone, frequently acknowledging uncertainty and explaining limitations. These characteristics reflect deliberate design decisions rather than technical constraints.

Claude AI was developed during a period of rapid advancement in large language models, alongside systems such as OpenAI’s ChatGPT and Google’s Gemini. As model capabilities increased, concerns regarding misinformation, unsafe outputs, and lack of transparency also grew.

Anthropic positioned Claude AI to address these concerns by emphasizing predictability, alignment, and long-context reasoning. This approach prioritizes safety and usefulness over benchmark performance alone.

Claude AI is commonly used for document analysis, enterprise knowledge retrieval, legal and compliance review, software development assistance, and professional writing. One of its distinguishing features is the ability to process large volumes of text in a single interaction.

By 2025, certain Claude configurations supported context windows of up to approximately 200,000 tokens. This enabled analysis of entire books or large document collections in a single session.

Claude AI is also closely associated with Anthropic’s research into AI governance and safety, particularly through the development of an approach known as Constitutional AI. This methodology aims to incorporate explicit guiding principles into model training and behavior. As a result, Claude AI functions both as a practical language tool and as an example of safety-oriented AI system design.

History and development

Founding of Anthropic (2021)

The development of Claude AI is closely linked to the founding of Anthropic in 2021. The company was established by former researchers and engineers from OpenAI and other organizations, many of whom expressed concerns that the capabilities of large language models were advancing more rapidly than methods for ensuring their safety, reliability, and alignment with human values.

Early research models

In its early stages, Anthropic focused primarily on foundational research rather than consumer-facing products. Initial versions of Claude were developed as internal research models used to explore alignment techniques, interpretability, and safety mechanisms.

These early models were deployed through limited partnerships, allowing Anthropic to observe model behavior in real-world settings, particularly when responding to ambiguous, adversarial, or potentially harmful prompts.

Claude 2 (2023)

Claude gained broader public attention with the release of Claude 2 in 2023. This version introduced notable improvements in reasoning ability, coding assistance, and long-context processing. Its expanded capacity to analyze lengthy documents contributed to early adoption among legal, academic, and enterprise users, distinguishing it from many contemporaneous language models optimized for shorter interactions.

Claude 3 and 3.5 (2024–2025)

In early 2024, Anthropic released the Claude 3 family, which introduced multiple model variants – Opus, Sonnet, and Haiku – designed to address different performance, latency, and cost requirements. This marked a shift toward a more tiered and product-oriented strategy.

Further refinements were introduced in mid-2024 with the release of Claude 3.5, which improved reasoning performance, multimodal understanding, and tool-use capabilities, and expanded support for longer context windows.

By late 2025, Claude 3.5 models were widely deployed in enterprise environments. During this period, Anthropic reduced the frequency of detailed public technical disclosures, reflecting a broader transition from an early-stage research emphasis toward commercial maturity and large-scale deployment.

Claude 4 family and enterprise tools

In 2025, Anthropic introduced the Claude 4 generation, beginning with Claude Opus 4 and Claude Sonnet 4 in May and followed later in the year by Claude Sonnet 4.5 and Claude Opus 4.5. These models emphasized:

- Advanced coding performance;

- Long-horizon reasoning;

- Agentic tool use for complex, multi-step workflows.

Building on Claude 3.5, the Claude 4 family expanded support for extended reasoning and computer interaction. It also added tighter integration with IDEs and cloud platforms.

Alongside the release of the new models, Anthropic introduced additional developer-focused infrastructure, including an Agent SDK and enhanced API features for memory and context management.

These tools support long-running, stateful agents built on Claude. They enable complex workflows with persistent context, task delegation, and iterative tool use.

Description

Claude AI is a general-purpose large language model designed to assist users with language-based tasks that require understanding, reasoning, and structured communication. At a technical level, Claude generates text by predicting likely continuations based on patterns learned during training.

In practice, it functions as a conversational system capable of answering questions, analyzing documents, generating written content, and supporting complex workflows.

A defining characteristic of Claude AI is its emphasis on long-context reasoning. Unlike systems optimized primarily for short prompts, Claude is designed to handle extensive inputs. For example, users may submit entire legal contracts or multi-chapter reports and request summaries, risk identification, or cross-section analysis. Claude can reference earlier portions of the input while analyzing later sections, improving coherence in long-form tasks.

Claude AI is used by a range of user groups. Individual users often rely on it for writing assistance, learning support, and research summaries. Professionals use it to draft reports, analyze text-based data, and review technical documentation. Enterprises integrate Claude into internal tools, customer support systems, and compliance workflows through application programming interfaces (APIs).

Claude AI does not possess consciousness, intentions, or understanding in a human sense. It cannot independently verify facts and may generate incorrect or misleading information. Users are advised to evaluate outputs critically, particularly in high-stakes contexts such as legal, medical, or financial decision-making.

Claude AI also differs from traditional search engines. While some deployments allow retrieval from external sources, Claude does not inherently verify information against the internet unless explicitly configured to do so. This distinction affects how its responses should be interpreted and validated.

How Claude AI works

Claude AI is built using a transformer-based neural network architecture, which enables the model to analyze relationships between words across large spans of text. This architecture allows Claude to consider broad context rather than interpreting sentences in isolation.
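The way a transformer relates words across a span of text can be illustrated with scaled dot-product attention, the standard building block of this architecture. The sketch below is a toy in plain Python with made-up two-dimensional vectors; it shows the general mechanism only and says nothing about Claude's actual internals, which the article does not describe. The function names `softmax` and `attend` are chosen for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # Similarity of the query to every position in the context.
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Weighted mix of the value vectors, one output number per dimension.
    dim = len(values[0])
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, mixed

# Three context positions with invented vectors (assumptions for the demo).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
weights, mixed = attend([1.0, 0.0], keys, values)
```

Positions whose keys align with the query receive more weight, which is how distant but relevant text can influence the output for the current position.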

During training, Claude was exposed to a mixture of publicly available text, licensed data, and content created by human trainers. The objective was not memorization of specific documents but learning patterns of language, reasoning, and explanation across domains such as law, science, and general communication.

A central element of Claude’s training methodology is Constitutional AI. Instead of relying exclusively on human feedback, Anthropic defined a written set of principles intended to guide acceptable and unacceptable model behavior. During training, Claude generates responses and evaluates them against these principles, revising outputs that violate guidelines related to harm, honesty, or misuse.

For example, when a generated response provides unsafe or misleading advice, the system is trained to identify why the response violates its principles and to produce an alternative. Over time, this process shapes the model’s behavior. As a result, Claude often explains the reasons for refusing certain requests rather than issuing a simple denial.
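The generate, critique, and revise cycle described above can be sketched as a small loop. Everything here is a hypothetical stand-in: the `PRINCIPLES` table, the keyword-based `critique`, and the canned `revise` text are invented for illustration. In the method as publicly described, a language model performs both the critique and the rewrite, and the revised outputs serve as training data rather than being computed for each user request.

```python
# Toy critique-and-revise loop. The principles and phrase lists below are
# hypothetical examples, not Anthropic's actual constitution.
PRINCIPLES = {
    "avoid_harm": ["bypass the safety interlock"],
    "be_honest": ["guaranteed to work"],
}

def critique(response):
    """Return the names of principles the response appears to violate."""
    lowered = response.lower()
    return [name for name, phrases in PRINCIPLES.items()
            if any(phrase in lowered for phrase in phrases)]

def revise(response, violations):
    """Stand-in for a model rewrite: replace the draft with an explanation."""
    return ("I can't provide that as written because it conflicts with: "
            + ", ".join(violations) + ".")

def critique_and_revise(draft, max_rounds=3):
    """Repeat critique and revision until no violation is found."""
    response = draft
    for _ in range(max_rounds):
        violations = critique(response)
        if not violations:
            break
        response = revise(response, violations)
    return response

flagged = critique_and_revise("Just bypass the safety interlock.")
unchanged = critique_and_revise("Unplug the device before cleaning it.")
```

The revised text names the principle involved, which mirrors the explanation-style refusals the article describes.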

By 2025, Claude 3.5 models also demonstrated improved tool-use capabilities. When integrated into enterprise environments, Claude can interact with external systems such as databases, calculators, or retrieval engines. This allows it to combine language reasoning with structured data for tasks such as financial analysis or document lookup.

Claude’s large context window remains a distinguishing feature. With support for up to approximately 200,000 tokens in some configurations, Claude can analyze extensive datasets or long conversations without losing track of earlier information. This capability is particularly relevant for professional users, though it increases computational cost and requires careful prompt design.
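A rough pre-flight check can show whether a set of documents is likely to fit within such a window. The sketch below assumes about four characters per token, a common rule of thumb for English text rather than a property of Claude's tokenizer, and the names `estimate_tokens` and `fits_in_context` are hypothetical. A real deployment would use the provider's own token counting.

```python
CONTEXT_LIMIT = 200_000   # approximate limit cited in the text
CHARS_PER_TOKEN = 4       # rough heuristic for English text (assumption)

def estimate_tokens(text):
    """Crude token estimate based on character count."""
    return len(text) // CHARS_PER_TOKEN + 1

def fits_in_context(documents, reserved_for_output=4_000, limit=CONTEXT_LIMIT):
    """Return (fits, estimated_input_tokens) for a list of documents."""
    used = sum(estimate_tokens(doc) for doc in documents)
    return used + reserved_for_output <= limit, used

small_ok, small_used = fits_in_context(["a" * 400])
large_ok, large_used = fits_in_context(["a" * 800_000])
```

Reserving part of the window for the response matters because input and output share the same budget.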

Models and capabilities

As of early 2026, the Claude AI ecosystem is centered on the latest generation of Claude models, including Claude Opus 4.5 and Claude Sonnet 4.5, which serve as the primary flagship models for most new enterprise deployments.

Earlier generations, including Claude 3 and Claude 3.5, remain in active production use but are less commonly selected for new greenfield deployments, and are more often retained for legacy systems or cost-sensitive scenarios.

Opus 4.5

Claude Opus 4.5 represents the highest-capacity tier of the Claude model lineup. It is positioned for tasks requiring deep reasoning, multi-step analysis, and structured synthesis across large or complex inputs. Common use cases include reviewing extensive legal contracts, analyzing technical specifications, and synthesizing information across multi-section reports or document collections.

Opus-class models support very large context windows. Depending on configuration, deployments support contexts of more than 100,000 tokens, and some offerings support approximately 200,000 tokens or more. These capabilities enable analysis of lengthy documents or prolonged interactions within a single session.

However, extremely long or poorly structured inputs may still lead to incomplete, inconsistent, or erroneous outputs, reflecting known limitations of transformer-based models when operating at the upper bounds of context length.

Sonnet 4.5

Claude Sonnet 4.5 occupies a mid-tier position within the Claude family, balancing reasoning capability, response latency, and cost efficiency. It is commonly used for drafting and editing documents, summarizing meetings, answering internal knowledge queries, and assisting with software development tasks such as code generation and documentation.

Sonnet 4.5 is frequently selected for large-scale or high-volume deployments where the maximum reasoning depth of Opus 4.5 is not required. Its relatively lower computational cost makes it suitable for routine operational workflows, though adoption patterns vary by organization, industry, and performance requirements.

Multimodal capabilities

Both Opus 4.5 and Sonnet 4.5 support multimodal inputs, particularly for analyzing documents that combine text with tables, charts, diagrams, and static images. Users may submit reports, presentations, or scanned documents and request summaries, explanations, or data extraction.

While multimodal performance has improved in recent Claude generations, outputs remain probabilistic. Claude may misinterpret complex visuals, ambiguous layouts, or low-quality images, and verification is recommended for critical or high-stakes applications.

Tool use and integration

Higher-capacity Claude models can be configured to interact with external tools through a tool-calling interface. These tools include:

- Calculators and computational tools;

- Code execution environments;

- Retrieval systems and structured databases;

- Enterprise-specific APIs and internal software systems.

Tool use and integration are deployment-specific and require careful system design, access control, and monitoring. The reliability of outputs depends on both the underlying model and the correctness of connected tools. Misconfiguration or insufficient oversight can lead to incorrect results or unintended behavior, particularly in automated or agentic workflows.
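The access-control point can be made concrete with a small dispatcher that only runs tools from an explicit allow-list. The request shape `{"name": ..., "input": {...}}`, the `TOOLS` registry, and the `calculator` tool are assumptions made for this sketch and are not taken from any specific SDK.

```python
import operator

def calculator(op, a, b):
    """A deliberately narrow tool: three named operations, nothing else."""
    ops = {"add": operator.add, "sub": operator.sub, "mul": operator.mul}
    if op not in ops:
        raise ValueError(f"unsupported operation: {op}")
    return ops[op](a, b)

# Allow-list: only tools registered here can ever be executed.
TOOLS = {"calculator": calculator}

def dispatch(tool_call):
    """Run one tool request and report success or failure as data."""
    name = tool_call.get("name")
    if name not in TOOLS:
        return {"ok": False, "error": f"unknown tool: {name}"}
    try:
        return {"ok": True, "result": TOOLS[name](**tool_call.get("input", {}))}
    except (TypeError, ValueError) as exc:
        # Bad arguments become an error result instead of crashing the loop.
        return {"ok": False, "error": str(exc)}

good = dispatch({"name": "calculator", "input": {"op": "mul", "a": 6, "b": 7}})
blocked = dispatch({"name": "shell", "input": {"cmd": "ls"}})
bad_args = dispatch({"name": "calculator", "input": {"op": "pow", "a": 2, "b": 3}})
```

Returning errors as structured results lets the calling system log them and decide whether to retry, which supports the monitoring described above.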

Comparison with other large language models

As of late 2025 and early 2026, Claude AI operates in a competitive landscape alongside OpenAI’s ChatGPT and Google’s Gemini models. Each system offers advanced reasoning, multimodal capabilities, and varying levels of real-time integration. Direct comparisons depend heavily on task, configuration, and deployment context.

Claude’s flagship models are often reported to excel at long-context analysis of large documents such as legal contracts, technical manuals, and multi-section reports. Enterprise users frequently cite consistency over long inputs as a key advantage, though these observations are based on reported usage rather than standardized benchmarks.

Recent ChatGPT releases are often noted for broad ecosystem features, including customizable models, plugin support, and optional live web access. In some evaluations, they perform strongly in creative or speculative tasks and in workflows requiring real-time information, though performance varies by configuration.

Google’s latest Gemini Pro models are commonly described as strong in multimodal tasks involving images, video, and real-time data, leveraging integration with Google’s ecosystem. Claude’s multimodal capabilities focus primarily on structured documents and static images rather than native audio or video processing.

Enterprises often characterize Claude as reliable and alignment-focused, particularly for compliance-sensitive applications. These characterizations reflect reported perceptions rather than objectively verified metrics, and performance varies by use case.

Use cases

Claude AI is widely used across industries because it addresses a common problem: making sense of large amounts of complex information. Its long-context capabilities and structured responses make it especially suitable for professional and enterprise environments. Common applications include:

- Business operations: Acts as an internal knowledge assistant for company policies, technical manuals, and internal guidelines. Example: HR teams can query benefits policies or compliance requirements.

- Legal and compliance: Helps review contracts, summarize regulations, and identify potential risks. Provides initial overviews that reduce time spent on detailed analysis.

- Software development: Assists with code generation, debugging, documentation, and understanding legacy systems. Developers can paste multiple files and query how they interact.

- Education and research: Explains complex concepts, summarizes academic papers, and structures essays. Supports learning rather than replacing independent thinking.

- Creative and professional writing: Used for drafting, editing, and ideation. Valued for clarity and coherence, though some users describe its output as less imaginative than that of competing systems.

Safety, ethics, and alignment

Safety and ethical alignment are central design considerations in Claude AI’s development rather than secondary features applied after deployment. Anthropic’s approach emphasizes embedding behavioral constraints directly into model training, an approach referred to as Constitutional AI. This methodology is intended to guide how the model evaluates and revises its own outputs, reducing reliance on continuous human moderation.

Core ethical principles

At the core of Constitutional AI is a written set of principles that define acceptable and unacceptable behavior. These principles include:

- Avoid physical harm;

- Discourage illegal or fraudulent activity;

- Respect individual autonomy;

- Be transparent about uncertainty.

During training, Claude generates candidate responses and then critiques those responses against the constitutional principles. If a response violates one or more guidelines, the system is trained to revise it, producing an alternative that better aligns with the stated rules.

This approach affects how Claude behaves in everyday interactions. Rather than issuing brief refusals, Claude often explains why a request cannot be fulfilled, referencing safety concerns or ethical constraints.

Proponents argue that this explanation-based refusal model increases transparency and helps users understand the system’s boundaries. Critics, however, contend that such explanations can feel overly cautious or instructional, particularly when the user’s intent is ambiguous or exploratory rather than harmful.

For example, researchers studying cybersecurity, public health, or risk mitigation may encounter refusals when asking about harmful scenarios for defensive or analytical purposes. While this reduces the likelihood of misuse, it can slow legitimate work and requires users to reframe requests carefully.

Challenges and limitations

Ethical alignment also influences how Claude handles uncertainty. Compared with some other large language models, Claude is more likely to acknowledge incomplete information or express uncertainty when confidence is not warranted. This behavior can reduce the likelihood of hallucinated or overly confident incorrect answers.

At the same time, it may be perceived as less decisive in environments where users expect clear, actionable guidance. In enterprise contexts, this trade-off can be beneficial or limiting depending on workflow requirements.

Bias remains a persistent challenge. Like all large language models, Claude is trained on data that reflects existing cultural, social, and linguistic biases. Anthropic applies mitigation techniques during training and evaluation, but bias cannot be fully eliminated.

Claude may reflect dominant viewpoints more strongly than minority perspectives, particularly in subjective domains such as ethics, politics, or cultural interpretation. This raises ongoing questions about whose values are encoded into AI systems and how those values should be governed.

From a regulatory and governance perspective, Claude AI is often viewed as relatively conservative in its design philosophy. This has made it attractive to organizations operating in regulated industries such as finance, healthcare, and law.

However, the effectiveness of alignment techniques at scale remains an open question. As Claude’s capabilities expand, ensuring that safety mechanisms remain effective without unduly limiting usefulness continues to be an active area of research and debate.

API and enterprise integrations

The Claude AI API is a major factor in its adoption by enterprises and developers. While many users interact with Claude through chat-based interfaces, the API enables organizations to embed the model directly into internal systems, products, and workflows. This transforms Claude from a standalone assistant into an infrastructural component within larger software environments.

Through the API, developers can submit text, documents, and images and receive structured responses programmatically. This supports use cases such as automated document classification, contract analysis, customer support triage, and report generation. Claude’s long-context capabilities allow systems to analyze complete conversation histories or large document sets rather than isolated inputs.
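A programmatic request of this kind might be assembled as follows. This is a minimal sketch that only builds a JSON body and sends nothing; the field names follow the general shape of a chat-style messages request, and the model identifier, the `<document>` wrapper, and the `build_request` helper are placeholders that should be checked against the current API documentation.

```python
import json

def build_request(document, question, model="claude-sonnet-4-5", max_tokens=1024):
    """Assemble a request body; the model name is a placeholder (assumption)."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                # The document is wrapped so the question stays clearly separate.
                "content": f"<document>\n{document}\n</document>\n\n{question}",
            }
        ],
    }

request = build_request("Clause 1: Either party may terminate with 30 days notice.",
                        "Summarize the termination risks.")
body = json.dumps(request)  # what an HTTP client would send as the payload
```

Keeping request construction in one function makes it easier to log, test, and cap `max_tokens` consistently across a workflow.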

In 2024 and 2025, Anthropic expanded Claude’s availability through integrations with Amazon Bedrock and Google Cloud platforms.

These partnerships simplified enterprise deployment by allowing organizations to run Claude within familiar cloud environments. This enables use of existing security controls, compliance certifications, and scaling infrastructure. For many organizations, these integrations reduced barriers related to data residency and regulatory compliance.

Some enterprise deployments support retrieval-augmented generation (RAG), enabling Claude to query external document repositories or databases and incorporate retrieved information into its responses. These configurations can improve factual grounding but are partner-specific and not universally available. They also introduce additional complexity, as incorrect retrieval or misinterpretation of sources can still lead to errors.
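The retrieval step can be sketched with a toy keyword-overlap ranker followed by prompt assembly. Production systems typically use embedding-based search instead; the scoring rule, the sample passages, and the function names below are assumptions made purely for illustration.

```python
import re

def words(text):
    """Lowercase word set, ignoring punctuation."""
    return set(re.findall(r"\w+", text.lower()))

def score(query, passage):
    """Number of distinct words the query and passage share."""
    return len(words(query) & words(passage))

def retrieve(query, passages, k=2):
    """Return up to k passages that share at least one word with the query."""
    ranked = sorted(passages, key=lambda p: score(query, p), reverse=True)
    return [p for p in ranked[:k] if score(query, p) > 0]

def build_prompt(query, passages):
    """Number the sources so an answer can cite them."""
    context = "\n".join(f"[{i + 1}] {p}" for i, p in enumerate(passages))
    return f"Answer using only the sources below.\n{context}\n\nQuestion: {query}"

POLICIES = [
    "Remote work requires manager approval.",
    "The cafeteria opens at 8 am.",
    "Parental leave is 16 weeks.",
]
question = "How many weeks of parental leave?"
hits = retrieve(question, POLICIES)
prompt = build_prompt(question, hits)
```

If retrieval returns the wrong passage, the model answers from the wrong source, which is the failure mode the paragraph above warns about.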

Claude 4-generation models, including the 4.5 releases, also support tool-calling interfaces, allowing interaction with external systems such as calculators, code execution environments, and structured APIs. These capabilities extend Claude’s functionality beyond text generation, but they require careful access control, monitoring, and fallback mechanisms.

Misconfigured tools can introduce security risks or unintended behavior, underscoring the importance of responsible system design.

Pricing and availability

Anthropic offers Claude AI through a combination of free access tiers, paid subscriptions, and usage-based API pricing. Free tiers generally provide limited interaction, with restrictions on usage volume, response speed, or access to the most advanced models. Paid plans, such as Claude Pro, provide higher usage limits, faster responses, and access to the latest flagship models, including Claude Sonnet 4.5 (and, where available, Opus 4.5).

For developers and enterprise customers, pricing is typically based on token usage, with higher-capacity models priced at higher per-token rates. Features such as expanded context windows and multimodal processing increase computational requirements, making usage monitoring and prompt optimization important for managing costs.
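Token-based pricing reduces to simple arithmetic, sketched below. The rates are placeholder values chosen for the example and are not Anthropic's actual prices; `estimate_cost` is a hypothetical helper.

```python
def estimate_cost(input_tokens, output_tokens,
                  input_rate_per_mtok, output_rate_per_mtok):
    """Cost of one request, with rates given per million tokens."""
    return (input_tokens * input_rate_per_mtok
            + output_tokens * output_rate_per_mtok) / 1_000_000

# Placeholder rates (assumptions): 2.00 per million input tokens,
# 10.00 per million output tokens.
long_request = estimate_cost(150_000, 2_000, 2.0, 10.0)
short_request = estimate_cost(1_500, 500, 2.0, 10.0)
```

Under these placeholder rates, the long-context request costs roughly forty times as much as the short one, which is why usage monitoring matters for large inputs.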

In large deployments, pricing may be customized and, under specific contracts, include service-level agreements (SLAs), dedicated support, or enhanced data isolation guarantees. These enterprise features are not automatically provided to all users and depend on negotiated agreements.

The introduction of very large context limits – up to approximately 200,000 tokens in some configurations – has significantly changed how organizations use Claude. Rather than splitting documents into smaller segments, teams can analyze entire datasets in a single interaction. While this improves efficiency and coherence, it also increases the cost of individual requests, emphasizing the need for careful workflow and prompt design.

Claude AI’s availability has expanded globally, particularly through cloud partnerships. However, access may remain constrained by regional regulations, export controls, or data residency requirements, which influence how and where Claude can be deployed, particularly in government or highly regulated sectors.

Future development

The future development of Claude AI is shaped by the balance between expanding capabilities and maintaining safety and reliability. Anthropic has indicated a preference for controlled, incremental advancement rather than rapid feature expansion. This strategy reflects the view that alignment and predictability are essential for long-term adoption, particularly in enterprise and regulated environments.

Expected areas of focus include:

- Reasoning depth: Enhanced multi-step, multi-hypothesis problem-solving and improved strategic reasoning;

- Tool use: More structured and safe integration with external systems, databases, simulations, and real-time data;

- Multimodal understanding: Improved interpretation of diagrams, handwriting, mixed media, and complex documents.

Regulatory developments will likely influence Claude’s trajectory. Governments are increasingly considering rules for advanced AI systems, particularly those used in decision-making or sensitive domains. Anthropic’s emphasis on alignment may facilitate compliance, but regulatory requirements may also slow deployment or limit certain capabilities.

Conclusion

As large language models continue to shape work, research, and communication, Claude AI illustrates one approach to developing advanced systems with safety and alignment as core principles. Whether this approach becomes a dominant model for future AI development remains uncertain. Regardless, Claude has played a significant role in shaping discussions about responsible artificial intelligence.